Transactional outbox

A reliable way to ensure data consistency across systems

Understanding the requirement and the problem

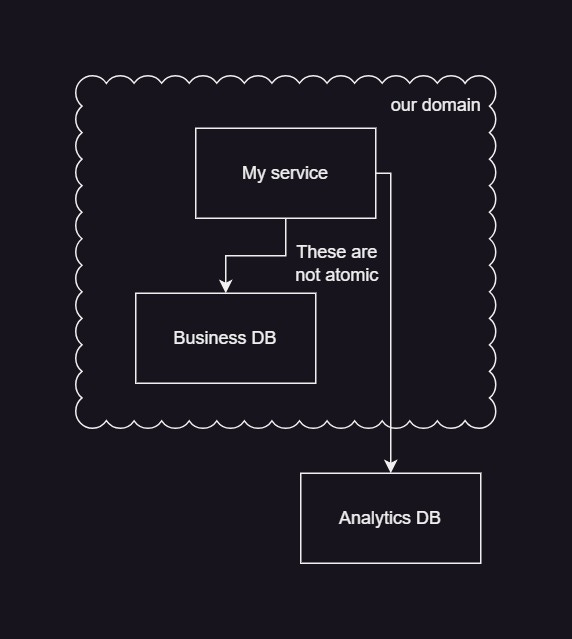

As our systems scale and more feature requirements come in, the tech stack becomes more complex and harder to maintain. Suppose you have a service that stores some business data, and the analytics database also needs some domain info (e.g. how an action was performed) along with that data. A naive way to achieve this is to write the data to two different data stores.

updateData(data, action) {

db.startTxn()

updateDb(data)

db.commit()

updateAnalytics(data, action)

}

This works, but it comes with an issue. Since the two updates go to different databases over separate network calls, what if the second write fails? How do you roll back the first change, especially if it was a complex operation? This is known as the dual-write problem.
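To make the failure mode concrete, here is a minimal sketch using an in-memory SQLite database as the service's own DB. The `business_data` table, the `AnalyticsUnavailable` exception, and an `update_analytics` stub that always fails are assumptions made for illustration; they are not part of the original design.

```python
import sqlite3


class AnalyticsUnavailable(Exception):
    """Raised by the stand-in analytics client to simulate a network failure."""


def update_analytics(data: str, action: str) -> None:
    # Hypothetical second write over the network; in this sketch it always fails.
    raise AnalyticsUnavailable("analytics store unreachable")


def update_data(db: sqlite3.Connection, data: str, action: str) -> None:
    # First write: committed locally before the second write is attempted.
    with db:  # commits on success, rolls back on exception
        db.execute("INSERT INTO business_data (payload) VALUES (?)", (data,))
    # Second write: a separate system, outside the transaction above.
    update_analytics(data, action)


def demo_dual_write() -> int:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE business_data (payload TEXT)")
    try:
        update_data(db, "order-42", "created")
    except AnalyticsUnavailable:
        pass  # the caller sees an error, but the first write is already durable
    # Number of rows that exist locally with no matching analytics record.
    return db.execute("SELECT COUNT(*) FROM business_data").fetchone()[0]
```

Calling `demo_dual_write()` leaves one committed row in `business_data` even though the analytics write never happened, which is exactly the inconsistency described above.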

Trying to come up with a solution

Since our service doesn’t own the analytics data, we shouldn’t be the ones writing to that DB. We could offload this task to a service owned by the analytics team: push the data to a queue or event stream and let them consume it.

updateData(data, action) {

db.startTxn()

updateDb(data)

db.commit()

pushAnalyticsDataToKafka(data, action)

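}

If you haven’t already guessed the issue here: this suffers from the same dual-write problem. The DB commit and the push to Kafka are still two separate writes, and the second can fail after the first has succeeded.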

Some people may try wrapping both calls in the DB transaction, or reversing the order of the two writes, like so.

updateData(data, action) {

db.startTxn()

pushAnalyticsDataToKafka(data, action)

updateDb(data)

db.commit()

}

This is even worse: if the DB write or the commit fails, the event has already been pushed to another service, but the updated data never made it into our system.
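The reversed ordering can be sketched the same way. In this illustration an in-memory list stands in for the Kafka topic, and a primary-key violation is used to force the DB write to fail; both are assumptions chosen only to keep the example self-contained.

```python
import sqlite3

# Stand-in for a Kafka topic: once appended, an event cannot be taken back.
published_events: list[tuple[str, str]] = []


def push_analytics_event(data: str, action: str) -> None:
    published_events.append((data, action))


def update_data_reversed(db: sqlite3.Connection, data: str, action: str) -> None:
    with db:  # commits on success, rolls back only the DB work on exception
        push_analytics_event(data, action)  # leaves the process immediately
        db.execute("INSERT INTO business_data (payload) VALUES (?)", (data,))


def demo_reversed_order() -> tuple[int, int]:
    published_events.clear()
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE business_data (payload TEXT PRIMARY KEY)")
    update_data_reversed(db, "order-42", "created")
    try:
        # Same key again: the INSERT violates the primary key and is rolled back.
        update_data_reversed(db, "order-42", "created")
    except sqlite3.IntegrityError:
        pass
    rows = db.execute("SELECT COUNT(*) FROM business_data").fetchone()[0]
    return rows, len(published_events)
```

After `demo_reversed_order()`, the database holds one row while two events have been published: the DB transaction rolled back, but the event could not be recalled.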

The solution

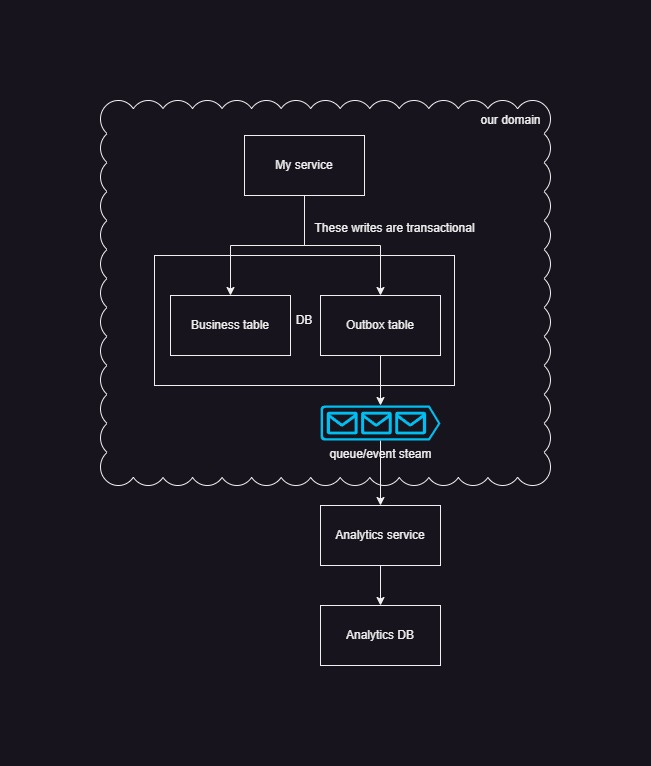

The problem with the approaches discussed so far is that they all write to two different systems, and we cannot guarantee consistency across them. We need our writes to be atomic. So instead of writing to two different databases, we write both the business data and the domain event to the same DB, in different tables, within a single transaction. The domain event goes to a separate table, which we call the outbox table, with a TTL on its rows. We then attach an event streaming pipeline to the outbox table using CDC (Change Data Capture). The resulting events are consumed by another microservice, which writes the data to the analytics DB. Let’s see the updated code and a graphical demonstration of this solution.

updateData(data, action) {

db.startTxn()

updateDb(data)

updateDbOutbox(data, action)

db.commit()

}

Why does this work?

We are writing our data to a single database, which can make the write atomic: either the data and the event are both written, or neither is, with no scope for partial success. Once the event is in the outbox table, our CDC pipeline picks up the new row and pushes a corresponding event to our queue. This means that if our transaction succeeds, the event is guaranteed to be delivered and can be consumed by other services, even if our microservice goes down.
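The all-or-nothing behaviour described above can be sketched with SQLite, where both tables live in the same database and share one transaction. The table layouts and the `NOT NULL` constraint used to force a failure are assumptions for illustration, and the TTL and CDC parts of the design are left out of this sketch.

```python
import sqlite3


def open_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE business_data ("
        " id INTEGER PRIMARY KEY, payload TEXT NOT NULL)"
    )
    db.execute(
        "CREATE TABLE outbox ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " payload TEXT NOT NULL, action TEXT NOT NULL)"
    )
    return db


def update_data(db: sqlite3.Connection, data: str, action: str) -> None:
    # One transaction covers both tables: commit on success, rollback on error.
    with db:
        db.execute("INSERT INTO business_data (payload) VALUES (?)", (data,))
        db.execute(
            "INSERT INTO outbox (payload, action) VALUES (?, ?)", (data, action)
        )


def counts(db: sqlite3.Connection) -> tuple[int, int]:
    rows = db.execute("SELECT COUNT(*) FROM business_data").fetchone()[0]
    events = db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]
    return rows, events
```

A successful `update_data(db, "order-42", "created")` adds one row to each table. If the outbox insert fails (for example, `action=None` violates the `NOT NULL` constraint), the business row inserted just before it is rolled back as well, so the two tables never disagree.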

This pattern comes with some pros and cons as well.

Pros

Guaranteed delivery and reliability: It ensures that the event is delivered even if our microservice goes down.

Scalable: The outbox table and the downstream infra can be scaled horizontally.
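To illustrate the delivery guarantee, here is a rough sketch of a polling relay that plays the role of the CDC pipeline. It is a simplified stand-in, not how a log-based CDC tool works internally: the `published` flag, the `relay_once` function, and the `publish` callback are all hypothetical names introduced for this example. Because a row is marked only after a successful publish, a crash between the two steps would cause the event to be sent again, so consumers in a setup like this should tolerate duplicates.

```python
import sqlite3
from typing import Callable

# Assumed outbox layout for this sketch; `published` tracks relay progress.
OUTBOX_SCHEMA = (
    "CREATE TABLE outbox ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " event TEXT NOT NULL,"
    " published INTEGER NOT NULL DEFAULT 0)"
)


def relay_once(db: sqlite3.Connection, publish: Callable[[int, str], None]) -> int:
    """Publish pending outbox rows in insertion order; return how many were sent."""
    pending = db.execute(
        "SELECT id, event FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    sent = 0
    for event_id, event in pending:
        publish(event_id, event)  # may raise; the row then stays pending
        with db:
            # Mark the row only after the broker accepted the event.
            db.execute("UPDATE outbox SET published = 1 WHERE id = ?", (event_id,))
        sent += 1
    return sent
```

If `publish` raises partway through, the rows already marked stay marked and the rest are picked up on the next call to `relay_once`, regardless of whether the service that wrote them is still running.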

Cons

It adds complexity, along with some extra latency and infra cost, since there are more hops to move the same data to the other DB.

A transactional outbox is a great way to guarantee event delivery and keep systems consistent. Since it comes with extra complexity, cost, and latency, you should evaluate whether it is worth it for your system.

Thanks for the nice write-up.

I was wondering: what if a failure occurs while consuming the event from the queue or while processing it?

Then we'll have to undo the changes in the service that created this event, right? Should that also be handled in a similar manner?